7 Best Local AI Tools for Running Large Language Models

Unlock the power of local LLMs! Discover how they enhance privacy, accessibility, and customization while saving you money.

As you step into the world of large language models, you’ll find that running them locally offers many advantages that cloud-based solutions can’t match.

Here’s why you should consider Local LLMs:

- Privacy: You get to keep full control over your data. This means your sensitive information stays within your environment and isn’t sent to external servers.

- Offline Accessibility: You can use LLMs even when you’re not connected to the internet. This is especially useful in situations where internet access is spotty or unreliable.

- Customization: You can fine-tune models to fit your specific tasks and preferences. This helps optimize performance for your unique needs.

- Cost-Effectiveness: By running LLMs locally, you can avoid ongoing subscription fees tied to cloud services, which can lead to significant savings over time.

Tools like LM Studio, Ollama, and AnythingLLM provide features such as local data storage, offline accessibility, and user-friendly interfaces. As you explore these options, you’ll discover that each tool has its own strengths, such as GPT4All’s wide hardware support and Jan’s emphasis on privacy.

By taking advantage of local LLMs, you get more control over your data and models.

Now, without further delay, let’s take a more in-depth look at the best local AI tools so you can figure out which one matches your needs.


1. Ollama

Ollama revolutionizes the way you interact with large language models (LLMs) right from your computer. This open-source tool lets you download, manage, and run LLMs without requiring cloud services, which is a game changer for many developers and organizations.

Ollama creates a dedicated environment for each model, containing all the necessary components, including weights, configurations, and dependencies. This means you can run AI models locally without any hassle. It is also arguably the simplest local AI tool to use through a local API.

The system is user-friendly, offering both command line and graphical interfaces, and it works seamlessly on macOS, Linux, and Windows. You can easily access various models from Ollama’s library, such as Llama 3.3 for text tasks, Mistral for code generation, Code Llama for programming, LLaVA for image processing, and Phi-3 for scientific work. Each model operates in its own isolated environment, allowing you to switch between different AI tools effortlessly based on your specific needs.

Organizations that adopt Ollama can significantly reduce their cloud costs while gaining better control over their data. This tool is perfect for powering local chatbots, conducting research, and developing AI applications that handle sensitive information. Developers can integrate Ollama with existing CMS and CRM systems, enhancing their capabilities while keeping all data on-site. By eliminating reliance on cloud services, teams can work offline and more easily comply with privacy regulations like GDPR, all without sacrificing AI functionality.

In short, if you’re looking to harness the power of local AI while maintaining control and privacy, Ollama is a solid choice.

Key Features of Ollama:

- Local API Support: It’s pretty spectacular that you can call Ollama models from other applications through a local API. This opens up plenty of possibilities, including integration with other tools, while keeping your data private.

- Comprehensive Model Management: Ollama offers a complete system for downloading and managing versions of AI models, ensuring users can easily maintain and update their resources.

- User-Friendly Interfaces: The platform caters to diverse work styles with both command line and visual interfaces, making it accessible for users who prefer different modes of interaction.

- Cross-Platform Support: Ollama is designed to operate seamlessly across multiple platforms and operating systems, enhancing its versatility for various users.

- Isolated Environments: Each AI model runs in its own isolated environment, which helps in preventing conflicts and maintaining stability during development and testing.

- Business System Integration: Ollama features direct integration capabilities with existing business systems, streamlining workflows and enhancing productivity.
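To make the local API point concrete, here is a minimal Python sketch that sends a prompt to Ollama over HTTP using only the standard library. It assumes Ollama is running on its default address (`http://localhost:11434`) and that you have already pulled the model you name. The `/api/generate` endpoint and its `model`, `prompt`, and `stream` fields come from Ollama’s REST API; the helper function names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's server is running on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,    # a model you have pulled locally, e.g. "llama3.3"
        "prompt": prompt,
        "stream": False,   # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return the generated text from the "response" field."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.load(reply)
    return body["response"]
```

For example, after running `ollama pull llama3.3`, calling `generate("llama3.3", "Summarize GDPR in one sentence.")` should return the model’s answer as a string. Turning streaming off trades token-by-token output for a simpler single JSON reply, which suits scripts and back-end integrations.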

2. LM Studio — The Best Local AI That Does Not Need a Learning Curve

LM Studio is an essential desktop application for tech enthusiasts who want to harness the power of AI language models right on their machines. It provides a straightforward interface that allows you to find, download, and run models from Hugging Face while ensuring that all your data and processing remain local. Like Ollama, LM Studio is one of the simplest and easiest-to-use local AI tools.

Think of it as your personal AI workspace. With its built-in server, LM Studio mimics OpenAI’s API, allowing you to integrate local AI into any tool that supports OpenAI. It supports various major model types, including Llama 3.3, Mistral, Phi, Gemma, DeepSeek, and Qwen 2.5. You can easily drag and drop documents to interact with them using RAG (Retrieval Augmented Generation), and all document processing happens right on your machine.

I use LM Studio regularly; check out my coverage of how I use its local API even with cloud tools.
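Because LM Studio’s server speaks the OpenAI chat-completions format, a client only needs a base URL and a JSON body. The sketch below assumes the server is running on LM Studio’s default port 1234 and that `model` is the identifier LM Studio shows for a model you have loaded; the function names are hypothetical, and only the Python standard library is used.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is started on its default port.
BASE_URL = "http://localhost:1234/v1"


def build_chat_payload(model, user_message, system_prompt=None, temperature=0.7):
    """Assemble an OpenAI-style chat-completions request body."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_message})
    return {"model": model, "messages": messages, "temperature": temperature}


def extract_reply(response_body: dict) -> str:
    """Return the assistant text from an OpenAI-style chat-completions response."""
    return response_body["choices"][0]["message"]["content"]


def chat(model, user_message, system_prompt=None, base_url=BASE_URL, timeout=120.0):
    """POST the payload to /chat/completions and return the reply text."""
    payload = build_chat_payload(model, user_message, system_prompt)
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        return extract_reply(json.load(reply))
```

Since only `base_url` ties this client to LM Studio, the same code should in principle work against other OpenAI-compatible servers, which is exactly what makes the built-in server useful for existing tools.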

You also have the flexibility to fine-tune how these models operate, including adjusting GPU usage and system prompts. However, keep in mind that running AI locally requires solid hardware. Make sure your computer has sufficient CPU power, RAM, and storage to manage these models effectively. Some users have reported performance slowdowns when running multiple models simultaneously, so plan accordingly.
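As a rough way to size “sufficient RAM,” you can estimate a model’s memory footprint from its parameter count and quantization level: the weights take about parameters × bits per weight ÷ 8 bytes. The sketch below applies that rule of thumb. The 20% overhead for the context cache and runtime buffers is an assumption, and real usage varies with context length, quantization format, and the tool you run.

```python
def estimate_model_memory_gb(
    params_billions: float,
    bits_per_weight: float,
    overhead: float = 0.2,
) -> float:
    """Rough memory estimate in GB (10**9 bytes) for running a model locally.

    Weights need params * bits / 8 bytes; `overhead` is an assumed fraction
    added on top for the context (KV) cache and runtime buffers.
    """
    if params_billions <= 0 or bits_per_weight <= 0 or overhead < 0:
        raise ValueError("params and bits must be positive; overhead must be >= 0")
    # billions of parameters * bytes per parameter = gigabytes
    weights_gb = params_billions * bits_per_weight / 8
    return weights_gb * (1 + overhead)


if __name__ == "__main__":
    for params, bits in [(3, 4), (7, 4), (8, 8), (70, 4)]:
        print(f"{params}B @ {bits}-bit: ~{estimate_model_memory_gb(params, bits):.1f} GB")
```

By this estimate, a 7B-parameter model quantized to 4 bits needs about 3.5 GB for its weights alone, or roughly 4.2 GB with the assumed overhead, while the same model at 16 bits needs about 14 GB before overhead. Loading several models at once adds these figures together, which helps explain the slowdowns some users report.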

For teams that prioritize data privacy, LM Studio eliminates any reliance on the cloud. It collects no user data and keeps all interactions offline. While it’s free for personal use, businesses will need to reach out to LM Studio directly for commercial licensing options. If you’re looking for a powerful, private, and local AI solution, LM Studio is definitely worth considering.

Notable Features of LM Studio:

- Seamless Model Discovery: Effortlessly explore and download models directly from Hugging Face, opening up a world of AI possibilities at your fingertips.

- Local AI Integration: Harness the power of an OpenAI-compatible API server, allowing you to integrate AI solutions into your local environment with ease.

- Interactive Document Chat: Engage in dynamic conversations with your documents using built-in Retrieval-Augmented Generation (RAG) processing, making information retrieval more intuitive.

- Privacy-First Operation: Enjoy complete offline functionality with no data collection, ensuring your information remains secure and private.

- Customizable Model Configurations: Tailor your AI experience with fine-grained configuration options, allowing you to optimize models to meet your specific needs.

3. AnythingLLM

Ollama’s simplicity makes it a great choice for those who want to run large language models locally without fuss, but if you’re looking for a more feature-rich solution, AnythingLLM is worth considering. AnythingLLM is a super cool open-source AI app that brings the power of local LLMs (Large Language Models) right to your desktop. It’s totally free and designed to make it easy for you to chat with documents, run AI agents, and tackle various AI tasks, all while keeping your data safe and sound on your machine.

What really makes AnythingLLM shine is its flexible setup. It’s built using three main components: a sleek React-based interface for smooth user interaction, a Node.js Express server that handles the heavy lifting with vector databases and LLM communication, and a dedicated server for processing documents. You get to choose your favorite AI models, whether you want to run open-source options locally or connect to services like OpenAI, Azure, AWS, and more. Plus, it supports a wide range of document types—think PDFs, Word files, and even entire codebases—making it super versatile for whatever you need.
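Document chat in tools like this generally follows the retrieval-augmented generation (RAG) pattern: split documents into chunks, find the chunks most relevant to a question, and paste them into the prompt sent to the model. The sketch below shows that flow in plain Python. It deliberately swaps the embedding model and vector database for simple bag-of-words cosine similarity, so it illustrates the idea rather than how AnythingLLM implements it; all names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real embedding model's input."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk_words(text: str, size: int = 50) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (the 'retrieval' step)."""
    q = Counter(tokenize(query))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    """Augment the question with retrieved context before sending it to an LLM."""
    context = "\n---\n".join(retrieve(query, chunks, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"
```

The string returned by `build_rag_prompt` is what you would send to a local model. Replacing word counts with learned embeddings stored in a vector database is the main upgrade a production pipeline makes, because it matches meaning rather than exact words.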

The best part? AnythingLLM really puts you in control of your data and privacy. Unlike cloud-based services that send your info off to external servers, AnythingLLM processes everything locally by default. For teams that require something more robust, there’s a Docker version that allows multiple users with custom permissions, all while keeping things secure. Organizations can save on those pesky API costs associated with cloud services by using free, open-source models instead.

Key Features of AnythingLLM:

- Local Data Processing: AnythingLLM prioritizes your privacy by processing data directly on your machine by default, ensuring that sensitive information remains secure and under your control. This makes it one of the best local AI tools for privacy-conscious users.

- Multi-Model Support: This platform offers a versatile framework that seamlessly connects to various AI providers, allowing users to leverage multiple models for enhanced functionality.

- Document Analysis Engine: With robust capabilities for handling PDFs, Word documents, and even code, the document analysis engine makes it easy to extract insights and manage information efficiently.

- Built-In AI Agents: AnythingLLM features integrated AI agents designed to automate tasks and facilitate web interactions, streamlining workflows and boosting productivity.

- Developer API: For those looking to customize their experience, the developer API provides the tools necessary for creating unique integrations and extensions, allowing for tailored solutions that meet specific needs.
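Here is a minimal sketch of calling the developer API from Python. Treat every endpoint detail as an assumption to verify against the API documentation your own AnythingLLM instance provides: it assumes the server listens on `http://localhost:3001`, that workspace chat is exposed at `POST /api/v1/workspace/<slug>/chat` with `message` and `mode` fields, that an API key generated in the app is sent as a Bearer token, and that the answer comes back in a `textResponse` field. The helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# ASSUMPTIONS (verify against your instance's API docs):
#   - server address http://localhost:3001
#   - workspace chat at POST /api/v1/workspace/<slug>/chat
#   - reply text returned in a "textResponse" field
BASE_URL = "http://localhost:3001/api/v1"


def build_workspace_chat_request(
    api_key: str,
    workspace_slug: str,
    message: str,
    mode: str = "chat",
    base_url: str = BASE_URL,
) -> urllib.request.Request:
    """Build an authenticated POST request for a workspace chat (assumed endpoint)."""
    url = f"{base_url}/workspace/{urllib.parse.quote(workspace_slug)}/chat"
    payload = {"message": message, "mode": mode}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # key created in AnythingLLM's settings
        },
        method="POST",
    )


def workspace_chat(api_key: str, workspace_slug: str, message: str, timeout: float = 120.0) -> str:
    """Send the chat request and return the answer text (assumed field name)."""
    request = build_workspace_chat_request(api_key, workspace_slug, message)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        return json.load(reply).get("textResponse", "")
```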

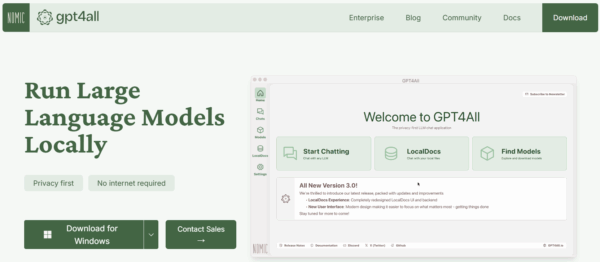

4. GPT4All

GPT4All brings a lot to the table, and if you’re into tech, you’ll appreciate it. This platform lets you run large language models right on your device, meaning all the AI processing happens locally and no data is sent out into the ether. The free version is a treasure trove, providing access to over 1,000 open-source models, including popular ones like Llama and Mistral.

What’s great is that it works seamlessly on standard consumer hardware, including Apple M-series Macs and AMD and NVIDIA setups. Plus, it doesn’t require an internet connection, making it perfect for offline projects. With the LocalDocs feature, you can dive into your personal files and create knowledge bases entirely on your machine. It’s flexible, too: it supports both CPU and GPU processing, so it can adapt to whatever hardware you have available.

For businesses, there’s an enterprise version available for $25 per device each month. This version includes additional features tailored for business needs, such as workflow automation with custom agents, integration with IT infrastructure, and direct support from Nomic AI, the developers behind the platform. The emphasis on local processing keeps your company data within your organization, helping you meet security requirements while still leveraging powerful AI capabilities. If you value privacy and control, this is definitely worth considering.

Why You Should Consider GPT4All:

- Local Operation: GPT4All runs completely on your hardware. No need for a cloud connection, which means better privacy and control over your data.

- Diverse Language Models: Gain access to over 1,000 open-source language models. This variety allows you to choose the best fit for your specific needs and projects.

- Document Analysis Made Easy: With the built-in LocalDocs feature, you can efficiently analyze documents right from your local setup, streamlining your workflow.

- Fully Offline Capabilities: Enjoy the freedom of working offline. No internet dependency means you can keep working even in low-connectivity situations.

- Enterprise Ready: GPT4All comes equipped with deployment tools and support tailored for enterprise environments, making it a solid choice for businesses looking to integrate advanced language processing solutions.

5. Jan

While many local AI tools focus on performance or ease of use, Jan AI stands out by prioritizing your privacy. It creates a secure, isolated environment for your AI agents, ensuring that your data stays safe and sound.

Jan offers a free, open-source alternative to ChatGPT that runs entirely offline. You can download popular AI models like Llama 3, Gemma, and Mistral to run directly on your computer. Plus, if you ever need to tap into cloud services, like OpenAI or Anthropic, you can do that too.

What sets Jan apart is its focus on user control. It features a local Cortex server that mimics OpenAI’s API, allowing seamless integration with tools like Continue.dev and Open Interpreter. All your data is stored in a local “Jan Data Folder,” meaning it stays on your device unless you opt to use cloud services. Think of it like VS Code or Obsidian: you can customize it to fit your needs.
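Since Cortex follows OpenAI’s API conventions, a quick way to check which models Jan is serving is the standard `GET /v1/models` call. This sketch assumes Jan’s local API server is enabled and listening on port 1337 (check the address shown in Jan’s settings) and that the response uses the OpenAI list shape with a `data` array; the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: Jan's local API server is enabled and listening on this address.
BASE_URL = "http://localhost:1337/v1"


def parse_model_ids(models_response: dict) -> list[str]:
    """Pull model identifiers out of an OpenAI-style /v1/models response."""
    return [entry["id"] for entry in models_response.get("data", [])]


def list_models(base_url: str = BASE_URL, timeout: float = 10.0) -> list[str]:
    """Ask the local server which models it can serve."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as reply:
        return parse_model_ids(json.load(reply))
```

The returned identifiers are what you would pass as `model` when pointing an OpenAI-compatible tool at Jan.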

Jan is compatible with Mac, Windows, and Linux, and it supports various GPUs, including NVIDIA (CUDA), AMD (Vulkan), and Intel Arc. The platform is built around user ownership: its code is open source under AGPLv3, so you can inspect or modify it if it suits you, which makes it one of the best local AI platforms for users who want that kind of control. While Jan can share anonymous usage data, that’s entirely up to you. You get to choose which models to run, giving you full control over your data and interactions.

For those interested in community support, Jan has an active Discord channel and a GitHub repository where users can collaborate and influence the platform’s development. So if you’re looking for a powerful, privacy-focused AI tool, Jan is definitely worth a look.

Why you should consider Jan:

- Offline Functionality: Jan operates completely offline with a local model, ensuring you can work without an internet connection. This is perfect for secure environments or when you’re on the go.

- OpenAI-Compatible API: The Cortex server exposes an OpenAI-compatible API, so existing tools and frameworks built for OpenAI can work with Jan without any hassle.

- Versatile Model Support: Whether you prefer local processing or cloud-based solutions, Jan supports both. This flexibility allows you to choose the setup that best fits your workflow.

- Custom Extension System: Want to add unique features? Jan’s extension system lets you customize the platform to meet your specific needs, enhancing your productivity.

- Multi-GPU Support: Jan is compatible with major GPU manufacturers, enabling you to maximize your hardware capabilities for faster processing and improved performance.

6. NextChat

If you’re a tech enthusiast looking for more control over your AI interactions, NextChat is definitely worth your attention. This open-source app brings you the powerful features of ChatGPT while giving you the reins to manage everything yourself. Whether you’re using it on the web or your desktop, it connects seamlessly with major AI services like OpenAI, Google AI, and Claude, all while keeping your data stored locally in your browser.

What sets NextChat apart are the features that standard ChatGPT lacks. You can create “Masks,” which are like custom AI tools tailored to specific contexts and settings. This means you can personalize your AI experience to fit your needs. Plus, it automatically compresses chat history for those lengthy conversations, supports Markdown formatting for better text presentation, and streams responses in real-time. It’s also multilingual, supporting English, Chinese, Japanese, French, Spanish, and Italian.
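Conceptually, a Mask is a reusable preset: a few context messages plus model settings that get prepended to every conversation, and history compression replaces old turns with a summary so long chats stay within the model’s context window. The Python sketch below models both ideas with hypothetical names (`Mask`, `compress_history`) and a caller-supplied `summarize` function. It is an illustration of the concepts, not NextChat’s actual data format or compression algorithm.

```python
from dataclasses import dataclass, field
from typing import Callable

Message = dict[str, str]  # {"role": ..., "content": ...}


@dataclass
class Mask:
    """Hypothetical preset: context messages plus model settings reused across chats."""

    name: str
    model: str
    context: list[Message] = field(default_factory=list)
    temperature: float = 0.7

    def build_messages(self, history: list[Message], user_message: str) -> list[Message]:
        # Mask context first, then the running conversation, then the new question.
        return [*self.context, *history, {"role": "user", "content": user_message}]


def compress_history(
    history: list[Message],
    keep_last: int,
    summarize: Callable[[list[Message]], str],
) -> list[Message]:
    """Replace all but the most recent `keep_last` messages with one summary message."""
    if keep_last < 0:
        raise ValueError("keep_last must be non-negative")
    if len(history) <= keep_last:
        return list(history)
    split = len(history) - keep_last
    older, recent = history[:split], history[split:]
    summary = {"role": "system", "content": "Summary of earlier conversation: " + summarize(older)}
    return [summary, *recent]
```

In a real app, `summarize` would itself call the model; passing it in keeps the compression logic independent of any particular provider.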

Instead of shelling out for ChatGPT Pro, you can connect your API keys from OpenAI, Google, or Azure. You have the flexibility to deploy it for free on a cloud platform like Vercel for a private instance, or run it locally on your choice of Linux, Windows, or macOS. Additionally, the built-in prompt library and custom model support allow you to create specialized tools that fit your exact requirements.

Here’s a quick rundown of what NextChat offers:

- Access a built-in prompt library and templates for quick customization.

- Local data storage ensures your privacy with no external tracking.

- Create custom AI tools using Masks.

- Connect with multiple AI providers and APIs.

- One-click deployment on Vercel for easy setup.

7. Llamafile

This project from Mozilla Builders takes the power of llama.cpp and combines it with Cosmopolitan Libc to create executable files that let you run AI without the hassle of installation or setup.

Here’s how it works: Llamafile stores model weights uncompressed inside a ZIP archive embedded in the executable, so they can be memory-mapped directly instead of being extracted first. It automatically detects your CPU features at runtime to ensure optimal performance, whether you’re using Intel or AMD processors. Plus, it can compile GPU-specific components on the fly using your system’s compilers. Thanks to Cosmopolitan Libc, the same file works on macOS, Windows, Linux, and BSD, supporting both AMD64 and ARM64 architectures.
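Because a llamafile uses standard ZIP structures, Python’s `zipfile` module can read it even with executable code in front of the archive, which makes it easy to confirm that entries are stored rather than compressed. This is a small inspection sketch, assuming you point it at a llamafile (or any ZIP-based file) on disk; the function name is hypothetical, and it does not check the page alignment that memory-mapping also relies on.

```python
import zipfile


def describe_entries(path_or_file) -> list[tuple[str, int, bool]]:
    """List (name, uncompressed size, stored_uncompressed) for each ZIP entry.

    zipfile locates the archive from its end-of-central-directory record,
    so data prepended to the archive (such as an executable) is tolerated.
    """
    with zipfile.ZipFile(path_or_file) as archive:
        return [
            (info.filename, info.file_size, info.compress_type == zipfile.ZIP_STORED)
            for info in archive.infolist()
        ]
```

Entries reported with `True` in the last column are stored as-is, which is what allows their bytes to be mapped into memory without a decompression step.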

Security is also a priority with Llamafile. It employs pledge() and SECCOMP to limit system access, keeping your environment safe. The tool is designed to be compatible with OpenAI’s API format, so you can easily integrate it into your existing projects. You can either embed the model weights directly into the executable or load them separately, which is especially handy for platforms with file size restrictions like Windows.

Here are some standout features of Llamafile:

- Deploy your AI models as single files with no external dependencies

- Built-in compatibility with the OpenAI API

- Direct GPU acceleration for Apple, NVIDIA, and AMD hardware

- Cross-platform support for all major operating systems

- Optimized runtime performance tailored to different CPU architectures

If you’re looking to simplify your AI deployment, Llamafile is definitely worth checking out.

Conclusion on Local AI Tools

Each tool out there has its own set of features designed to meet different needs, but what you really want is a solution that combines privacy, customization, and cost-effectiveness.

Running AI models locally means your data stays on your machine, which is a big win for privacy. Plus, you can tweak these models to suit specific tasks, making them more effective for your projects. And let’s not forget about the cost – using local tools can save you from those pesky subscription fees that come with cloud services.

Here’s a quick rundown of some standout options:

- AnythingLLM is great for handling documents and has solid team features.

- GPT4All ensures compatibility with a wide range of hardware.

- Ollama is all about simplicity and ease of use.

- LM Studio offers deep customization options.

- Jan AI prioritizes your privacy above all.

- NextChat reimagines ChatGPT from scratch.

What unites these tools is a shared goal: to put powerful AI capabilities right at your fingertips without relying on the cloud. As hardware advances and these projects develop, local AI is not just a possibility; it’s becoming a practical choice.

So, which tool aligns with your needs? Whether you value privacy, performance, or simplicity, there’s something out there for you. Dive in and start experimenting!

FTC Disclosure: The pages you visit may have external affiliate links that may result in me getting a commission if you decide to buy the mentioned product. It gives a little encouragement to a smaller content creator like myself.
