10 Tips on How to Increase the Google Crawl Rate of a New Website

If the question “How do I make Google index my site faster?” has crossed your mind at least once, it is time to learn some simple and useful tricks that will help improve the Google crawl rate for your website.

Google crawl rate is the number of requests per second that a scanning bot sends to your website. These bots are also called “spiders” or “spiderbots.” They systematically roam the World Wide Web and browse site pages, looking for new things to index. Website owners cannot control this process directly, and it is not possible to dictate crawl frequency. However, a smart strategy for publishing new content will help you influence crawlers’ behavior.
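To make the "requests per second" definition concrete, here is a minimal sketch that estimates the average Googlebot request rate from server access log lines. It assumes logs in the common Combined Log Format and identifies the bot only by the word "Googlebot" in the user agent, which can be spoofed; the function name and the sample lines are made up for illustration.

```python
import re
from datetime import datetime

# Matches the bracketed timestamp of a Combined Log Format line,
# e.g. [10/Oct/2023:13:55:36 +0000]
TIMESTAMP = re.compile(r"\[([^\]]+)\]")


def googlebot_requests_per_second(log_lines):
    """Average requests per second for lines whose text mentions Googlebot."""
    times = []
    for line in log_lines:
        if "Googlebot" not in line:
            continue  # skip regular visitors and other bots
        match = TIMESTAMP.search(line)
        if match:
            times.append(datetime.strptime(match.group(1), "%d/%b/%Y:%H:%M:%S %z"))
    if len(times) < 2:
        return 0.0  # not enough data to measure a rate
    span = (max(times) - min(times)).total_seconds()
    return len(times) / span if span > 0 else float(len(times))


# Hypothetical sample: three Googlebot hits within ten seconds, one human visit.
BOT = '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" {BOT}',
    f'66.249.66.1 - - [10/Oct/2023:13:55:38 +0000] "GET /blog HTTP/1.1" 200 900 "-" {BOT}',
    '203.0.113.7 - - [10/Oct/2023:13:55:40 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0"',
    f'66.249.66.1 - - [10/Oct/2023:13:55:46 +0000] "GET /about HTTP/1.1" 200 700 "-" {BOT}',
]

if __name__ == "__main__":
    print(googlebot_requests_per_second(SAMPLE_LOG))
```

Running this over a full day of logs gives a rough baseline, so you can see whether later changes coincide with more frequent crawler visits.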

Crawling is very significant for SEO. If bots don’t crawl effectively, many pages simply won’t be indexed. Technically, crawling is the process in which search engines follow links to reach new links. An easy way to get your new pages noticed by bots and indexed quickly is to get your site linked from popular sites, for example by guest posting and leaving comments.

Google uses complex algorithms that define the ultimate scanning speed for every website. Website owners aim to have as many pages as possible processed at once without putting extra load on the website. There are numerous things you can do to help search bots: pinging, sitemap submission, robots.txt file usage, and improving site navigation. However, their efficiency depends on the peculiarities of a particular web resource. These measures should be used in combination, not as separate one-time improvements.
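As an illustration of robots.txt usage, the sketch below checks which URLs a set of rules lets a crawler fetch, using Python's standard `urllib.robotparser`. The rules and the example.com URLs are invented for the example. Python's parser applies the first matching rule, which may differ from how Google resolves conflicting rules, so treat the result as a quick sanity check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep crawlers out of /admin/, allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""


def is_crawlable(robots_txt, user_agent, url):
    """Return True if the given rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules offline, no network call
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://example.com/blog/post", "https://example.com/admin/login"):
        print(url, is_crawlable(ROBOTS_TXT, "Googlebot", url))
```

A check like this helps catch a stray `Disallow` rule that would hide pages you actually want crawled.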

Both users and search bots are getting smarter. The former look for relevant information; the latter try to be more human-like in their requirements.

#1. Update Content Regularly

Content updates keep the information on your website relevant and in line with the requirements and expectations of users who land on your site looking for something. As a result, people are more likely to find and share your site.

Meanwhile, scanning bots will add it to their list of trustworthy sources. The more frequently you update the content, the more frequently crawlers notice your site. Updating content about three times a week is recommended. The easiest way to do this is to start a blog or add audio and video materials, which is simpler and more efficient than constantly adding new pages.

#2. Use Web Hosting with Good Uptime

When Google bots visit a website during downtime, they take note and use this experience to set a lower crawl rate later, which makes it harder for users to find your site. If a site is down for too long, expect even worse results. This is why choosing a reliable host is crucial in the first place. Nowadays, many hosting providers offer uptime of 99% or more.
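An uptime percentage is easier to judge once it is converted into hours of downtime. The short calculation below does that conversion; it assumes a 365-day year, and the function name is only illustrative.

```python
def downtime_hours_per_year(uptime_percent):
    """Convert an uptime percentage into expected hours of downtime per year."""
    hours_per_year = 365 * 24  # assumes a non-leap year
    return hours_per_year * (1 - uptime_percent / 100)


if __name__ == "__main__":
    for uptime in (99.0, 99.9, 99.99):
        print(f"{uptime}% uptime -> {downtime_hours_per_year(uptime):.2f} hours down per year")
```

At 99% uptime a site can be unreachable for about 87.6 hours a year, while 99.9% brings that down to under 9 hours, so the difference between plans matters more than the numbers suggest at first glance.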

#3. Avoid Duplicate Content

If you are tempted to outsmart Google’s crawlers by adding duplicate content to new pages, just don’t. It is crucial to avoid duplicate content between websites or pages. Publishing quality information twice doesn’t double your success when it comes to spider bots’ activity. On the contrary, both users and bots will be puzzled by identical information in different website sections.

Confusion will not be the only negative consequence. Search engines may lower your site’s ranking or even ban your website. Make sure no two links return identical content. There are numerous free duplicate-content checkers for conducting this check.
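For a quick in-house check, exact duplicates can be found by hashing each page's text. The sketch below is one simple way to do it, assuming you already have the page text keyed by URL; it normalizes whitespace and letter case, so it only catches identical copies, not near-duplicates or paraphrases.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Given {url: text}, return groups of URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]


if __name__ == "__main__":
    # Hypothetical pages: /a and /b differ only in spacing and case.
    pages = {
        "/a": "Hello   World",
        "/b": "hello world\n",
        "/c": "Something else entirely",
    }
    print(find_duplicates(pages))
```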

#4. Optimize Page Load Time

Page load time is one of the defining factors in user experience. If a page takes more than five seconds to load, people are very likely to leave it and move to the next position in the search results. Load time depends on how heavy a page is, and this is something website owners can control. Get rid of excessive scripts, heavy images, animations, PDFs, and similar files. Embedding video and audio files is an alternative, though embeds can be particularly problematic as well.
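One way to act on this is to list a page's assets with their sizes and flag the heaviest ones. The sketch below assumes you have already collected asset sizes in bytes (for example from your browser's network panel); the 200 KB limit and the file names are arbitrary illustrations, not recommended values.

```python
def heavy_assets(asset_sizes, limit_bytes=200_000):
    """Return (total page weight, assets above the limit sorted largest first)."""
    total = sum(asset_sizes.values())
    heavy = sorted(
        ((name, size) for name, size in asset_sizes.items() if size > limit_bytes),
        key=lambda item: item[1],
        reverse=True,
    )
    return total, heavy


if __name__ == "__main__":
    # Hypothetical asset sizes in bytes.
    assets = {
        "hero.png": 1_400_000,
        "app.js": 350_000,
        "styles.css": 40_000,
        "brochure.pdf": 2_100_000,
    }
    total, heavy = heavy_assets(assets)
    print(f"Total page weight: {total} bytes")
    for name, size in heavy:
        print(f"Consider trimming {name}: {size} bytes")
```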

#5. Establish Sitemap

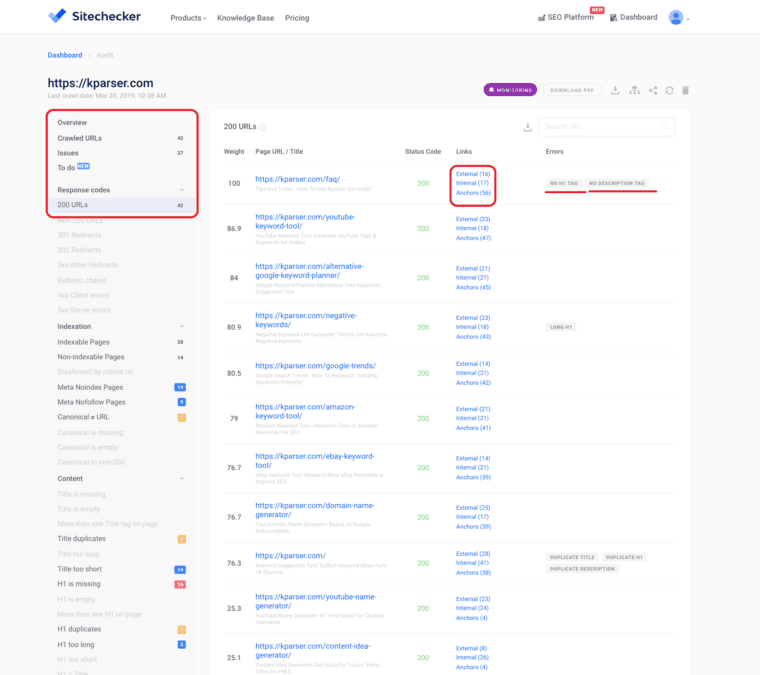

Actually, this is one of the first things you should do. For Google bots, a sitemap is a comprehensive list of links to your website pages. In a way, you link all the pages of your website to Google. It is an instruction for crawlers in which you indicate what should be indexed and what shouldn’t. A crawler becomes a website status checker of your resource. It is better to invite the spiders after considerable updates than to wait for them to come. Most website crawler tools use the same technology: scripts that let bots scan particular sites and report on their internal organization, such as internal linking, anchor texts, images, and meta tags.

By submitting an XML sitemap, you introduce your website to Google’s scanning bots, and they may return the favor with more frequent crawls. Just remember that sitemap submission is a friendly invitation to crawl, not a command. Prepare everything and be ready for a visit, but keep in mind that Google can ignore it. Many CMSs can create this file for you; WordPress provides numerous plugins. As a last resort, you can create the file manually by listing all the existing links.
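If your CMS cannot generate the file, a small script can produce it from a list of links. The following is a minimal sketch using Python's standard library and the sitemaps.org XML namespace; the example.com URLs and dates are placeholders, and a real site would feed in its own page list.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is 'YYYY-MM-DD' or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Placeholder pages for illustration.
    xml_text = build_sitemap([
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/blog/first-post", None),
    ])
    # Save as UTF-8 so the file matches its XML declaration.
    with open("sitemap.xml", "w", encoding="utf-8") as handle:
        handle.write(xml_text)
```

The resulting `sitemap.xml` is typically placed at the site root and then submitted through Google's webmaster tooling.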

#6. Obtain More Backlinks

Backlinks directly influence PageRank, and resources with high PageRank are crawled more frequently. It is better to remove links to low-quality sites and excessive paid links, and to avoid or get rid of black-hat link-building practices. You can find more information on backlinks in one of our previous guides. Check your backlinks regularly to keep them under control. You never know when a site that links to yours gets banned or runs into similar trouble.

#7. Add Meta and Title Tags

Meta tags and title tags are the first things search engines crawl when they land on your website. Prepare unique tags for different pages and never reuse them. If crawlers notice pages with identical tags, they are likely to skip one of them. Don’t stuff titles with keywords; one per page will be enough. Remember to synchronize updates: if you change some keywords in the content, change them in the titles as well. Meta tags structure the data about pages. They can identify a web page’s author, address, and frequency of updates. They also take part in creating titles for hypertext documents and influence how a page is displayed among the results.
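Duplicate titles are easy to miss by eye on a larger site. The sketch below, which assumes you already have each page's HTML keyed by URL, extracts the `<title>` text with Python's built-in `html.parser` and groups pages that share a title. The class and function names are illustrative.

```python
from collections import defaultdict
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text found inside the <title> element."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self.in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def duplicate_titles(pages):
    """Given {url: html}, return {title: [urls]} for titles used more than once."""
    by_title = defaultdict(list)
    for url, html in pages.items():
        parser = TitleParser()
        parser.feed(html)
        title = parser.title.strip().lower()
        if title:  # pages without a title are ignored here
            by_title[title].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}


if __name__ == "__main__":
    pages = {
        "/a": "<html><head><title>Home</title></head></html>",
        "/b": "<html><head><title> home </title></head></html>",
        "/c": "<html><head><title>About</title></head></html>",
    }
    print(duplicate_titles(pages))
```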

#8. Optimize Images

Bots don’t read images directly. To improve the Googlebot crawl rate, website owners need to explain what exactly spiderbots are looking at. For this, use alt attributes (often called alt tags): short text descriptions that search engines can index. Only optimized images are featured among search results and can bring you extra traffic. The same applies to videos: it is necessary to add text descriptions.
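To find images that still lack a description, you can scan a page's HTML for `<img>` elements without alt text. This is a rough sketch using Python's built-in `html.parser`; it also flags empty `alt=""` values, which can be legitimate for purely decorative images, so review the results rather than treating every hit as an error.

```python
from html.parser import HTMLParser


class AltChecker(HTMLParser):
    """Records the src of every <img> whose alt text is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if not (attributes.get("alt") or "").strip():
                self.missing.append(attributes.get("src", "(no src)"))


def images_missing_alt(html):
    """Return the src values of images without usable alt text."""
    checker = AltChecker()
    checker.feed(html)
    return checker.missing


if __name__ == "__main__":
    # Hypothetical markup for illustration.
    sample = (
        '<img src="bike.png" alt="Red bicycle leaning on a wall">'
        '<img src="banner.png">'
        '<img src="spacer.png" alt="" />'
    )
    print(images_missing_alt(sample))
```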

#9. Use Ping Services

Pinging is one of the quickest and most effective ways to show bots that content on your website has been updated. Numerous ping services will handle this task by automatically notifying crawlers when new content is published on your website.

#10. Website Monitoring Tools

Using Google Webmaster Tools (now called Google Search Console) will help you stay aware of the crawl rate and all the related stats. This data lets you analyze spiders’ activity and come up with the best strategy for improvement. Users can set crawl rates manually, though it doesn’t always help. You can view the current crawl rate, see what is actually being crawled, find out which pages have not been indexed and why, and, based on this data, ask Google to recrawl some pages.

Final Thoughts

Now you know how to get Google to crawl your site faster. It is a complicated and time-consuming task that requires constant monitoring and management. Nevertheless, once everything is set up, it will be much easier to keep track of crawlers’ journeys and to gain their favor.

Images are from Unsplash.

FTC Disclosure: The pages you visit may have external affiliate links that may result in me getting a commission if you decide to buy the mentioned product. It gives a little encouragement to a smaller content creator like myself.